At Packhelp, we value great software delivering value to our customers above the tools and technology themselves. In the end, our customers don’t care what programming language we use or whether we build our own servers or use a PaaS (Platform as a Service) provider like Heroku, as long as their experience is great.

With this pragmatism in mind, we decided to go with Heroku’s PaaS offering. From the early days, it enabled us to prototype rapidly, develop our products swiftly, and deploy frequently and safely, with little concern about the underlying infrastructure. We were able to sustain massive growth and reach subsequent milestones and company goals with little operations effort. That’s the power of a good PaaS.

However, as our codebase, the number of production services, and the number of developers on the team grew, we slowly started seeing certain limitations of Heroku. Months later, we began to realise that a PaaS in general would be a sub-optimal long-term solution for us.

In this post, I want to share specific Heroku characteristics that turned out to be problematic for us. I hope it provides useful tips for anyone considering a major Heroku (or any other PaaS) migration.

This is not intended as a rant on Heroku. Heroku’s products form a complete and valuable offering that bootstrapped our company to where we are today, and the support was always helpful and responsive. In the world of software and infrastructure, there are no one-size-fits-all solutions. This is more of a collection of observations that might help you decide whether Heroku is a good match for your company needs, current size, and future scale.

In the first section, I’ll highlight the Heroku features we regard as valuable and useful. Then, I’ll go through the issues we’ve encountered one by one, describing them in more detail to provide context for our pain points.

Heroku, the good parts

A world-class CLI. The Heroku CLI is one of the best out there. Its commands are predictable and discoverable, with a well-designed API. It even suggests the right command if you make a typo. All that goodness comes from oclif, the CLI-building library that Heroku itself open sourced. If you’re interested in all the features that make a CLI great, check out https://clig.dev/.

Out of the box operations capabilities. Any Heroku app automatically gets features that are otherwise costly to get right:

- Viewing and streaming logs (including piping to a collection tool of your choice),

- Rolling back to any recent release,

- Automatic Certificate Management (ACM) - no need to worry about SSL,

- Managing environment variables & secrets easily,

- Logging into the app for quick debugging,

- Basic CPU/MEM metrics and monitoring.

Rich add-ons library. Heroku offers a diverse selection of its own and third-party services supporting your applications, including cache engines, databases, monitoring, logging, authentication, and many, many others. This allows building applications out of existing blocks, without the need to set them up and fine-tune them on your own (have you ever deployed your own Elasticsearch instance, or fine-tuned PostgreSQL runtime flags?). Furthermore, these add-ons offer powerful, non-trivial features, like DB read replicas or high availability.

Review apps. After a simple setup, it’s easy to make Heroku spawn a fully-featured development/test environment of your app 100% automatically, for every new GitHub pull request, and then deprovision it later when it’s no longer needed. The flexibility and cost-savings this feature provides are immense. Any team that can, should use it.

Other notable features include Heroku CI (although we haven’t used it), automatic deployments, rich access controls allowing cooperation with external developers on a per-project basis, pipelines, extensive buildpacks library, and Docker container support.

Together, this all results in a robust, cohesive product that enforces many good DevOps practices to enable fast flow and reliability of the services (e.g. https://12factor.net/).

Scaling up

We were pretty happy with our PaaS experience – everything was on auto-pilot. Then we grew, adding more and more engineers to our team, and realised that we needed more development/test/UAT environments so that every developer could independently test and preview their changes in a uniform, consistent, production-like environment. We also expanded our automated test suite, putting additional strain on our test environments (i.e. we needed more compute power to keep our tests running quickly).

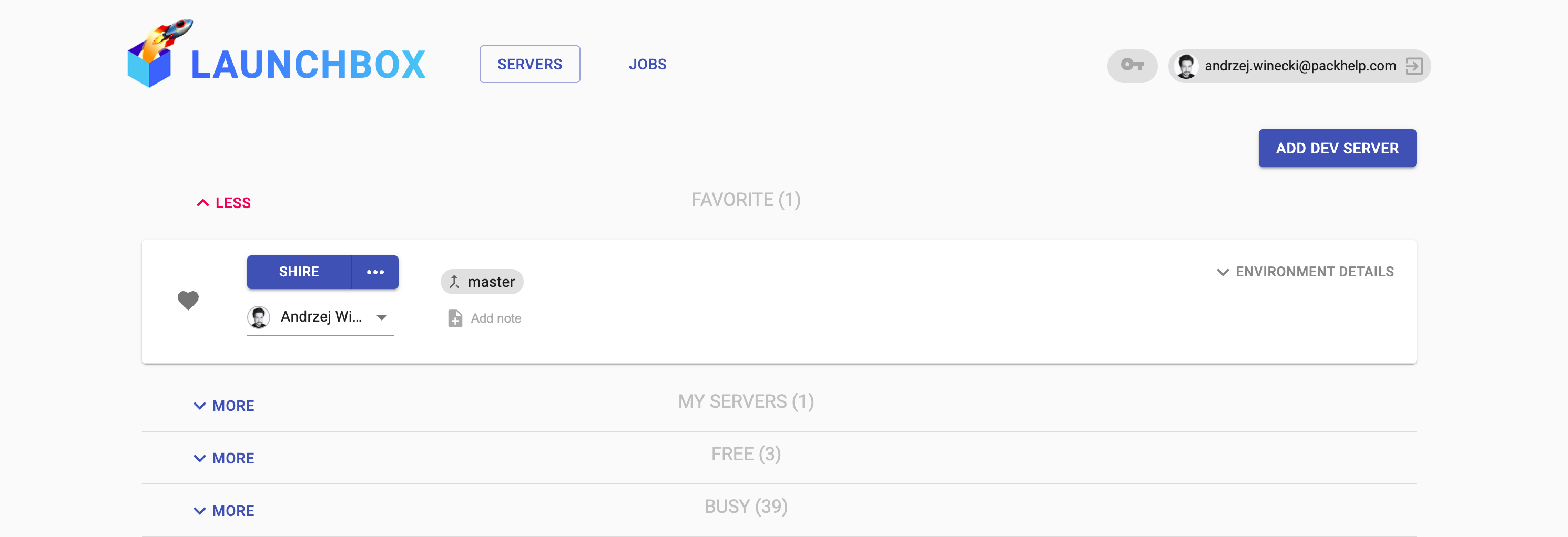

To alleviate the situation, we considered using Heroku’s review apps feature. Unfortunately, some of our services were too complex to set it up for, while for others it would have meant a painful trade-off (see the Docker build issues later in this article). This prompted us to automate the provisioning and management of dev/test environments in-house. We couldn’t help feeling that we were reimplementing functionality Heroku already offered because of its limitations in other areas.

Later, as we started moving our apps to containers in an effort to achieve quicker builds and deployments and to improve our services’ operations and flexibility, we ran into additional roadblocks with Docker on Heroku.

Finally, we wanted to improve our apps’ runtime observability with better logging, metrics, and APM solutions, only to find out that setting them up required a great deal of hacking on our part and exposed us to non-obvious costs.

Inefficient builds & deployments on Heroku

One of our main applications hosted on Heroku was a Ruby on Rails application. We used the Ruby buildpack to automatically create its production runtime on Heroku.

The build time and size limits

With buildpacks, Heroku enforces strict limits on the size of your application’s final build (the slug), as well as on the time it takes to generate it:

First, we exceeded the build time limit in certain situations. Several steps of our production build (e.g. media asset compilation and optimisation) were important but took a considerable amount of time.

Then, for a while, we found ourselves dangerously close to the 500 MB slug size limit; reaching it would have meant we could no longer make production releases.

To say the least, we were surprised when we found out. As we didn’t want to jump prematurely on the microservices train without closer analysis, these constraints felt as if someone was imposing an opinionated way of building apps on us, limiting the software architectures at our disposal. For example, we couldn’t go far with a modular monolith approach (especially in a DDD/Domain-Driven Design context) in this setup.
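If you stay on buildpacks, one way to avoid being surprised by the slug size limit is to measure it in CI before every release. The sketch below is a minimal, hypothetical guard. It assumes the 500 MB limit applies to the compressed slug and treats a local gzipped tarball of the build directory as a rough stand-in for Heroku’s own packaging. `compressed_size`, `check_slug`, and the 80% warning threshold are illustrative names and values, not part of any Heroku tooling.

```python
import os
import tarfile
import tempfile

# Assumption: "500 MB" means 500 MiB and applies to the compressed slug.
SLUG_LIMIT_BYTES = 500 * 1024 * 1024
# Hypothetical early-warning threshold: flag builds at 80% of the limit.
WARN_RATIO = 0.8


def compressed_size(build_dir: str) -> int:
    """Pack build_dir into a gzipped tarball and return the archive size in bytes."""
    with tempfile.TemporaryDirectory() as tmp:
        # The archive lives outside build_dir so it never includes itself.
        archive_path = os.path.join(tmp, "slug.tar.gz")
        with tarfile.open(archive_path, "w:gz") as tar:
            tar.add(build_dir, arcname=".")
        return os.path.getsize(archive_path)


def check_slug(build_dir: str, limit_bytes: int = SLUG_LIMIT_BYTES,
               warn_ratio: float = WARN_RATIO) -> str:
    """Classify a build as 'ok', 'warn' (close to the limit) or 'fail' (over it)."""
    size = compressed_size(build_dir)
    if size > limit_bytes:
        return "fail"
    if size >= warn_ratio * limit_bytes:
        return "warn"
    return "ok"
```

In a CI job you might run `check_slug` on the compiled build output (the path is up to your pipeline) and fail the release on `"fail"`. The real slug can differ from this estimate: files listed in `.slugignore` are excluded, and Heroku’s compression may not match gzip exactly.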

Using Heroku buildpacks (and their shortcomings)

There are two ways to build applications on Heroku: either using buildpacks or building Docker images.

Buildpacks are a good place to start if you’re new to containers. Each major programming language or technology stack has a well-crafted buildpack ready to use. However, as with many things in programming, a solution that is good for many things is rarely a great fit for the problem at hand, and buildpacks are no exception. If you need more control over your app’s build, they lack flexibility. Even if your app’s core dependencies don’t change frequently, they’re going to be built from scratch during every single deployment, forcing developers to wait (a waste of their time) and pushing you closer to the 15-minute build time limit.

NOTE: In 2018, Heroku and Pivotal joined forces to bridge the gap between buildpacks and the container world, creating OCI-compatible Cloud Native Buildpacks. While I haven’t used them yet, they seem to be a promising alternative. You can read more about this initiative at https://buildpacks.io/.

Heroku Docker support

Thankfully, Heroku supports Docker containers. This is a big step forward compared to the legacy buildpack approach. For starters, the 15-minute build time limit and the 500 MB slug size limit no longer apply. There’s much more flexibility when it comes to your runtime: you can define the environment details in a standard Dockerfile (in truth, you can write custom buildpacks to the same end, but you’ll need to do that in a bunch of .sh scripts instead).

Once you dig deeper, though, and start using Docker in Heroku for production, you’ll find out there are additional limitations:

- You can’t use the review apps feature unless you build your app’s Docker image on Heroku, but…

- If you build your Docker image on Heroku, you can’t use Docker Layer Cache (Heroku starts every Docker build from scratch) – one of the best Docker features.

- Pipeline promotions are not supported.

- Your app’s container has to boot within 60 seconds.

- Images with more than 40 layers may fail to start in the Common Runtime.

Among other limitations, the above confronted us with an interesting dilemma:

Do you prefer to build your applications on your own CI/CD infrastructure and potentially dramatically increase their speed due to DLC (Docker Layer Cache), or accept that builds are going to be slower but use the review apps feature?

We’ve decided we wanted both. We believe that to achieve fast flow and deliver our best work, developers need to be able to build the applications as quickly as possible. Additionally, they should have access to on-demand development/test environments.
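To see why Docker Layer Cache matters so much for build times, here is a simplified model of a layered build cache in Python. Each layer’s key is derived from its parent’s key, its instruction, and the files it reads, so a change invalidates that layer and every layer after it. This is a sketch of the idea only (Docker’s real cache rules also consider file metadata, build arguments, and more), and the Rails Dockerfile steps in the hypothetical `rails_image` helper are illustrative.

```python
import hashlib
from typing import List, Set, Tuple

# A build step: (instruction, bytes of the files that step reads).
Step = Tuple[str, bytes]


def layer_keys(steps: List[Step]) -> List[str]:
    """Derive a cache key per layer from its parent key, instruction and inputs."""
    keys: List[str] = []
    parent = ""
    for instruction, inputs in steps:
        digest = hashlib.sha256()
        digest.update(parent.encode() + b"\0" + instruction.encode() + b"\0" + inputs)
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def build(steps: List[Step], cache: Set[str]) -> List[str]:
    """Return the instructions that actually had to run; cache hits are skipped."""
    executed: List[str] = []
    for (instruction, _), key in zip(steps, layer_keys(steps)):
        if key not in cache:
            executed.append(instruction)
            cache.add(key)
    return executed


def rails_image(gemfile_lock: bytes, app_code: bytes) -> List[Step]:
    """Hypothetical Dockerfile order: dependencies first, application code last."""
    return [
        ("COPY Gemfile Gemfile.lock ./", gemfile_lock),
        ("RUN bundle install", b""),
        ("COPY . .", app_code),
        ("RUN bundle exec rails assets:precompile", b""),
    ]


if __name__ == "__main__":
    ci_cache: Set[str] = set()
    print(build(rails_image(b"lock-v1", b"code-v1"), ci_cache))  # all four steps run
    print(build(rails_image(b"lock-v1", b"code-v2"), ci_cache))  # bundle install is reused
    print(build(rails_image(b"lock-v1", b"code-v3"), set()))     # empty cache: all four run
```

Ordering the dependency steps before the application code is what lets `bundle install` be reused when only the code changes. A build that always starts from an empty cache, as Heroku’s Docker builds do, runs every step every time.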

Networking limitations (unless you pay extra)

Heroku isolates all applications (and each individual dyno) by default. This is a good thing: we don’t want our apps to talk to unwanted actors (be it our own apps or other Heroku customers’ apps on the same, shared infrastructure). But it also means that two dynos within the same application cannot talk to each other directly (unless routed through a DB or message bus add-on).

On the other hand, the main web dyno is always accessible from the internet. Even if you run your app behind a CDN proxy to guard against DDoS attacks, it’s not possible to disable the *.herokuapp.com URL for your app, leaving it as a potential attack vector.

You can’t contain your apps within a private network (like AWS VPC), at least not within the standard offering. Heroku offers an Enterprise Plan, which includes Private Spaces. Although the enterprise pricing is not publicly available, expect to pay a monthly premium to have access to these features ($1,000+/mo).

Little control over your app’s datacenter location

Similarly to the networking limitations, there are constraints when it comes to deploying apps in specific locations. Unlike the major cloud offerings, Heroku doesn’t let you deploy an app in a specific datacenter (e.g. eu-ireland-dublin). By default, you can choose either the eu or the us region (source). If you upgrade to the Enterprise Plan, though, you can specify your app’s region more precisely (although the list of available regions is still limited).

While this is not a big problem at first glance, it might become a pressing issue. If you provision a database as a Heroku add-on, it is guaranteed to reside next to your application in the same datacenter. However, suppose you want to use a DB provider that isn’t listed as a Heroku add-on. Even if you can control the DB’s region and set it to eu-ireland-dublin, you can’t guarantee that your Heroku app in the eu region will be deployed in Dublin, Ireland. This has important implications when you’re optimising for low latency, potentially forcing you to use only technologies available as Heroku add-ons.
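A back-of-the-envelope calculation shows why datacenter placement matters. The sketch below assumes a request issues its database queries sequentially, so each one pays a full network round trip. The query count, execution time, and round-trip times are made-up figures for illustration, not measurements of Heroku or any DB provider.

```python
from typing import Dict


def sequential_db_time_ms(queries: int, rtt_ms: float, query_ms: float) -> float:
    """Each sequential query pays one network round trip plus its execution time."""
    return queries * (rtt_ms + query_ms)


# Hypothetical request profile and round-trip times, for illustration only.
QUERIES_PER_REQUEST = 30
QUERY_EXECUTION_MS = 0.5
RTT_SCENARIOS_MS: Dict[str, float] = {
    "app and DB in the same datacenter": 0.5,
    "app and DB in different datacenters": 20.0,
}

if __name__ == "__main__":
    for label, rtt in RTT_SCENARIOS_MS.items():
        total = sequential_db_time_ms(QUERIES_PER_REQUEST, rtt, QUERY_EXECUTION_MS)
        print(f"{label}: {total:.0f} ms of DB time per request")
```

With these hypothetical numbers, the same request spends about 30 ms waiting on the database when co-located and about 615 ms when the database sits in another datacenter. That is why not being able to guarantee placement matters for latency-sensitive apps.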

Using non-standard technologies (not in add-ons)

If the above limitations don’t raise your eyebrows, let me demonstrate our struggle to connect the APM of our choice, Datadog, to our RoR application.

Previously, we were using New Relic to monitor our production application performance. It worked out of the box as a Heroku add-on. Then we decided to commit to another provider – Datadog.

We soon realised that there was no Datadog add-on available in the Heroku add-ons marketplace. But that didn’t stop us. Since our application was containerized, we decided to embed the datadog-agent in the final app’s Docker image, running it as a background process that starts with the app (as there are public buildpacks doing the same thing, I reckon we were not the only ones to hack our way into making this work).

After running this setup for a while, though, we realised that this approach has serious drawbacks:

- Once we scaled our main app’s dynos, each of them spawned its own datadog-agent collecting metrics (remember the isolation feature? There’s no other way to instrument an app scaled across multiple replicas).

- Since Datadog charges for each host running APM-instrumented apps, we were initially billed separately for each dyno of our app. Even worse, as Heroku switched hosts between deployments, we were charged for more hosts than the number of dynos we had running in production. We’ve since sorted this out with Datadog support, but it was a bitter surprise.

- It’s incredibly wasteful. Datadog’s architecture is clever and efficient – once you install datadog-agent on a single host, it’s performant enough to asynchronously collect metrics from many services. It was obvious that scaling up with this approach would lead us to pay for additional, unnecessary compute resources.

In hindsight, we’ve realised that there’s a big missing piece in the Heroku PaaS model – there is no Pod concept.

A Pod is a Kubernetes primitive for running a group of containers together on the same host, sharing a network namespace while isolated from other Pods. While going into this and other container orchestration concepts is outside this article’s scope, understanding this idea provides a good perspective on the pitfalls of the Heroku Docker runtime.

The ability to run several containers together and have them talk to each other over the local network lets you use any setup and technology you need. In addition, it’s sometimes useful to run a single instance of a process on every host rather than in every application, as with a datadog-agent background process collecting metrics from multiple services. Kubernetes implements precisely this idea with DaemonSets. Similar capabilities are possible without a full-blown orchestrator like Kubernetes, for example with Docker Compose or Docker Swarm.
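The difference between embedding an agent in every replica and running one agent per host, DaemonSet-style, is easy to quantify. The sketch below counts agents for a hypothetical fleet. The service names, replica counts, replicas per host, and the 256 MB agent footprint are all assumptions (not Datadog figures), and the packing calculation assumes equal-sized replicas.

```python
import math
from typing import Dict

# Hypothetical fleet: replicas per service, and how many replicas fit on one host.
REPLICAS: Dict[str, int] = {"web": 12, "worker": 6, "api": 6}
REPLICAS_PER_HOST = 8
AGENT_MEMORY_MB = 256  # assumed agent footprint, not a measured Datadog figure


def agents_embedded(replicas: Dict[str, int]) -> int:
    """Agent baked into every container image: one agent per replica."""
    return sum(replicas.values())


def agents_per_host(replicas: Dict[str, int], replicas_per_host: int) -> int:
    """DaemonSet-style: one agent per host, with equal-sized replicas packed densely."""
    return math.ceil(sum(replicas.values()) / replicas_per_host)


if __name__ == "__main__":
    embedded = agents_embedded(REPLICAS)
    per_host = agents_per_host(REPLICAS, REPLICAS_PER_HOST)
    print(f"embedded: {embedded} agents, ~{embedded * AGENT_MEMORY_MB} MB")
    print(f"per host: {per_host} agents, ~{per_host * AGENT_MEMORY_MB} MB")
```

For this made-up fleet, that is 24 agents (about 6,144 MB) when embedded versus 3 agents (about 768 MB) with one per host, and the gap grows with every replica you add.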

Cost effectiveness

The final long-term roadblock for us was Heroku’s pricing. Even without upgrading to the Enterprise Plan, maintaining more than a handful of services with numerous short- or long-lived development/test environments that mimic production can become expensive.

Within the Standard and Performance dyno types, the costs mostly depend on the amount of RAM your application’s dyno requires. Here’s the monthly breakdown:

Standard 1X: $25/mo for 512MB, ~$0.049/MB

Standard 2X: $50/mo for 1GB, ~$0.049/MB

Performance M: $250/mo for 2.5GB, ~$0.1/MB

Performance L: $500/mo for 14GB, ~$0.035/MB

The first thing to notice is that there’s little flexibility here. Once your app needs more than 1GB of RAM, you have to pay 5x more. And once you pass 2.5GB, there’s an additional 2x increase.

Let’s compare this to a standard AWS c5.2xlarge instance (8 vCPU, 16GiB RAM):

On-demand monthly cost: $283.24/mo

RAM capacity: 16GiB

Cost per MB: $283.24 / 17,179MB (16GiB in decimal megabytes) ≈ $0.016/MB

Compared with the AWS c5.2xlarge instance, Heroku Standard 1X or 2X dynos cost roughly 3x as much per MB of RAM, Performance M roughly 6x, and Performance L roughly 2x. Keep in mind that I’ve used the AWS on-demand price here, which is on the more expensive side. With a reserved instance, or another, cheaper cloud provider, it’s not difficult to decrease these costs by 30-50%.
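The per-MB comparison can be reproduced in a few lines. This sketch uses the list prices quoted above but measures both sides in the same binary megabytes (1 GB = 1,024 MB, so 16GiB = 16,384 MB). That makes the AWS price per MB slightly higher than the 17,179MB figure does. Prices change over time and vary by AWS region, so treat the inputs as illustrative.

```python
from typing import Dict, Tuple

# Monthly list prices (USD) and RAM (MB, 1 GB = 1024 MB) as quoted above.
HEROKU_DYNOS: Dict[str, Tuple[float, int]] = {
    "Standard 1X": (25, 512),
    "Standard 2X": (50, 1024),
    "Performance M": (250, 2560),
    "Performance L": (500, 14336),
}
# AWS c5.2xlarge on-demand monthly price as quoted above; 16 GiB in the same MB unit.
AWS_MONTHLY_USD = 283.24
AWS_RAM_MB = 16 * 1024


def usd_per_mb(monthly_usd: float, ram_mb: int) -> float:
    """Monthly cost of one MB of RAM."""
    return monthly_usd / ram_mb


def heroku_to_aws_ratios() -> Dict[str, float]:
    """How many times more each dyno type costs per MB of RAM than the AWS instance."""
    aws = usd_per_mb(AWS_MONTHLY_USD, AWS_RAM_MB)
    return {name: usd_per_mb(price, ram) / aws
            for name, (price, ram) in HEROKU_DYNOS.items()}


if __name__ == "__main__":
    for name, ratio in heroku_to_aws_ratios().items():
        print(f"{name}: {ratio:.1f}x the AWS price per MB")
```

With these inputs the ratios come out at roughly 2.8x for the Standard dynos, 5.6x for Performance M, and 2.0x for Performance L, the same ballpark as the figures above.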

Heroku’s premium prices are to be expected. It provides a great many useful features built into its platform, and that’s valuable. However, a more subtle issue here is the inflexibility of the pricing. Imagine you’re running 30 instances of your app on average (dev/test/UAT environments). The apps run fine for a long time, well below 1GB RAM. Slowly, the app uses more and more memory, finally crossing the 1GB mark. Suddenly, instead of $50 x 30 = $1,500/mo, you’re paying $250 x 30 = $7,500/mo. That’s a $6k/mo increase over a tiny amount of additional RAM!

In reality, Heroku doesn’t kill your app if you go 1MB over the limit. It starts killing your app with OOM (Out Of Memory) errors only after you cross the threshold by a considerable amount. Still, this prospect, among other reasons, pushed us to consider other container runtimes (like Kubernetes) to reclaim control over our compute resources and costs.
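The pricing cliff is easiest to see by picking the cheapest dyno that fits a given memory footprint. The sketch below treats each dyno’s RAM as a hard quota, which is stricter than Heroku’s actual behaviour described above, and uses the monthly list prices quoted earlier. `cheapest_tier` and `fleet_cost` are illustrative helpers.

```python
from typing import List, Tuple

# (name, monthly USD, RAM in MB) as listed above, cheapest first.
TIERS: List[Tuple[str, int, int]] = [
    ("Standard 1X", 25, 512),
    ("Standard 2X", 50, 1024),
    ("Performance M", 250, 2560),
    ("Performance L", 500, 14336),
]


def cheapest_tier(required_mb: int) -> Tuple[str, int]:
    """Smallest dyno whose RAM quota covers required_mb (quota treated as hard)."""
    for name, price, ram_mb in TIERS:
        if required_mb <= ram_mb:
            return name, price
    raise ValueError(f"no single dyno in this list offers {required_mb} MB")


def fleet_cost(required_mb: int, environments: int) -> int:
    """Monthly cost of running `environments` copies of the app on the cheapest fitting dyno."""
    _, price = cheapest_tier(required_mb)
    return price * environments


if __name__ == "__main__":
    before = fleet_cost(1000, 30)
    after = fleet_cost(1030, 30)
    print(f"${before}/mo -> ${after}/mo (+${after - before}/mo)")
```

In this model, going from 1,000 MB to 1,030 MB per environment moves 30 environments from $1,500/mo to $7,500/mo.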

Closing thoughts

It’s hard to write a universal guideline for choosing a particular infrastructure or production runtime. As application developers, we should be happy to have a wide range of options to run our apps. None of them is inherently bad (except for the overpriced shared PHP hosting of the ol’ days).

Deciding what cloud vendor or platform to use depends on many complex factors. To name a few:

- What production environments do you have the most experience with (do you have dedicated operations staff?),

- How big is your budget (and how cost-effective do you need to be),

- How much capacity do your services require,

- How complex are your applications’ runtimes.

Nevertheless, there’s nothing like a battlefield report from a real-world company. I hope this post acts as one, providing hints for tech companies considering going into or out of the PaaS world.